- Key insight: Generative AI instantly fabricates convincing financial and identity documents.

- What's at stake: Increased fraud undermines onboarding, compliance and anti-money-laundering controls at banks.

- Expert quote: AI-created documents are "nearly impossible to spot," says Klaros Group partner Sepideh Rowland.

Source: Bullets generated by AI with editorial review

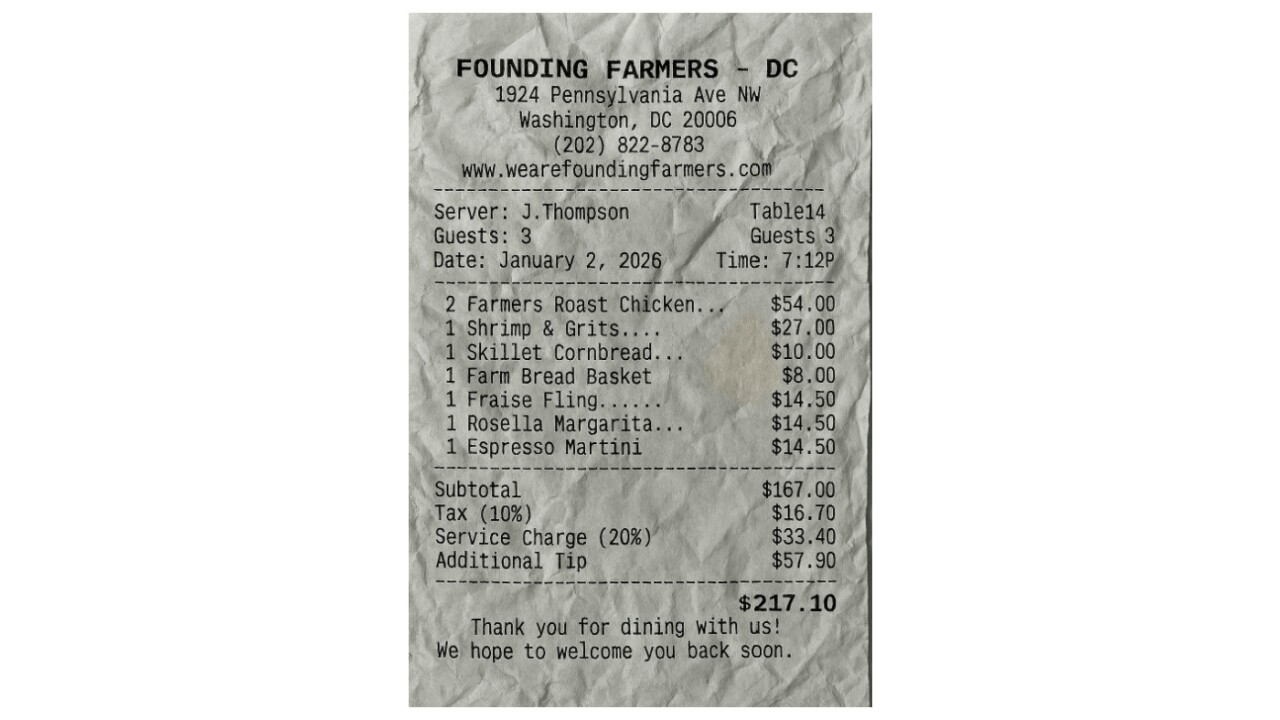

Sepideh Rowland recently typed a simple prompt into her Microsoft Copilot: "Make me a dinner receipt for 3 people at Founding Farmers, totaling $217." The generative AI model found real dishes and prices from the menu of the Washington, D.C., restaurant and produced a plausible receipt. Rowland gave the model one more instruction: Crinkle the paper and put a small water stain on it.

The result was a fake realistic enough that she could easily have submitted it to her boss, claimed it was for a client dinner, and gotten reimbursed, according to Rowland, who is a partner at Klaros Group and a board member at Battle Bank in Avon, Colorado.

"Employees are doing this at their companies right now, and they are doing it at scale," Rowland wrote in a LinkedIn post. "They can create fake bank statements, fake utility bills, and they're nearly impossible to spot, particularly when they are scanned and submitted electronically."

The risk for banks is not just staffer expense-account fraud but also fake documents used for account opening and other verification steps. AI-generated scams expose banks to liability when they convince either bank employees or automated systems that a fake person, or company, is real. Scamsters could use this technology to drum up fake businesses that apply for loans or use banks to launder money. It could also become easier for scamsters to commit fraud on the scale of the Paycheck Protection Program fraud, which cost the federal government billions of dollars.

Steve Brunner, chief risk officer at Bankwell Bank, said in an interview last week that he is concerned, especially from a Bank Secrecy Act or know-your-customer standpoint, about "fraudsters leveraging AI to create identities to mimic people's voices, their looks, their mannerisms, duplicating documentation that banks typically verify against or rely on, like bill payments, utility bills, that type of thing – they can easily go to AI."

Bankwell has not seen a huge spike in AI-generated fakes specifically, "but fraudulent documents overall have gotten better in the past couple years," Ryan Hildebrand, the bank's chief innovation officer, said in a follow-up email exchange. Utility bills and pay stubs are the usual targets, he said.

The New Canaan, Connecticut, bank is addressing this problem with document verification tools that catch metadata and formatting inconsistencies and software that looks "at the bigger picture – does the identity, device, and transaction history all hang together?" Hildebrand said (he declined to share the names of any specific products). And on top of that, the bank uses human review for anything that feels off.

"This is an arms race," Hildebrand said. "We're treating it as an ongoing investment, not a solved problem."

Many banks are struggling with this same challenge.

"You've got to fight AI with AI," Amanda Swoverland, CEO of Hatch Bank, told American Banker. "The human eye is probably not going to be able to see some of those things. There is some emerging technology coming out that can spot those types of things. That's where the investment really needs to come in."

This is not a new problem, of course. In the 1970s, Bernard Madoff's market-making firm started creating thousands of fake monthly account statements reflecting artificially high returns using an outdated IBM computer. Clients, regulators and even Madoff's own sons were fooled by the fake statements for decades before his Ponzi scheme came to light in 2008.

But where Madoff had to employ 15 people full-time to create the fake account statements, generative AI tools like Copilot and ChatGPT create deepfake documents instantly, with a single prompt.

Fake IDs

Many banks are vulnerable to fake documents used at the point of account opening, Rowland said. To onboard new customers in person, banks typically ask for a government-issued photo ID, and a branch employee checks whether the photo on the driver's license matches the face in front of them.

Banks will need to be more vigilant and have compliance professionals train the frontline staff to detect fake documents.

"We've got to be able to arm them with enough information to say, look, it's going to look really real," Rowland said.

In the more common practice of online account opening, fraudsters can create deepfake synthetic people in video format and combine them with fake identity documents.

"Not only can they hold their device up to their passport, their ID card, but they can now move," Rowland said. "So you can say, please turn your head to the right and the left, and all of it is synthetic."

Synthetic identities have always been around, but when they are AI enabled, "they have the ability to bring it to life and give the perception that that is a real person," Rowland said.

In 2022, fraudsters created a deepfake of Patrick Hillmann, who was then chief communications officer at Binance (he's now chief strategy officer at Logical Intelligence), based on footage from Hillmann's news interviews and other TV appearances. The deepfake fooled several potential investors on a Zoom call.

"I think we're so much worse off, where these AI tools are generating realistic looking people and documents," Rowland said.

A host of vendors offer products designed to detect fake or synthetic identity documents and/or verify identity data in IDs against databases, including Mitek, Onfido, iDenfy, Docuverus, LexisNexis ThreatMetrix and Resistant AI.

Other types of fake documents

AI-assisted fake or doctored utility bills, expense reports and loan documents are a different story, according to Peter Tapling, managing director at PTap Advisory.

"There's no information sharing database that says the account number that's presented on the utility bill is a real account number at the utility," he said. "It is a very real problem, and the challenge that we have is one of customer experience. If we put this level of scrutiny and distrust on all of the good applicants, then we're creating a very not friendly environment."

Some people have suggested the answer is to hire more auditors to review documents.

"I don't think that's necessarily the answer, but I think there's a balance between modern technology, the costs of that, and arming our investigators with enough knowledge to understand what these synthetic documents look like," Rowland said.


Vendors like Inscribe, Mitek, Quin, Reality Defender, SentiLink, Socure and Veryfi use AI to detect a variety of AI-generated or AI-altered documents.

Money laundering

Criminals sometimes use AI-generated financial records, for real or fictitious shell companies, to trick banks into facilitating fraud or money laundering. They also use such records to open accounts for fake money mules.

In some cases, fraudsters get help from bank employees willing to sell information about company policies and procedures on the dark web, Rowland said. For instance, if a fraudster knows 4 p.m. is the wire cutoff time, they'll send wires at around 3:45 p.m., when the operations group is knee-deep in processing.

"Chances are good if there's any illicit activity, the bank isn't necessarily going to know until at least the next day," Rowland said. "All of this information helps them create deepfake documents and deepfake videos, and know the timing of it and how to proceed."

The challenge is acute for community banks that have limited personnel and resources to fight fraud. "So what we see is criminal organizations are tapping into these community banks where they're serving their community, but they're exposed in ways that they're not even aware of," Rowland said.

Outdated regulations are part of the problem, in Rowland's view. "A lot of our banking processes and regulations are intended for functions that were here 30, 40, 50 years ago," she said. "Think of the Bank Secrecy Act. We haven't modernized it in 50 years."

As the 25th anniversary of 9/11 nears, she sometimes wonders: If terrorist activity were happening, could anyone understand and detect it?

"Chances are minimal," Rowland said. "We are still bound by legacy regulations, legacy requirements and checking boxes. We've got to move away from check boxes. We've got to partner with law enforcement. We've got to be able to move more real time into what we're detecting and what we're reporting into law enforcement.

"For me, this is a wake-up call that we've got to modernize regulation," she said. "We can't sit still. If we sit still, we're falling back. There's so much happening in this space. It's troublesome for somebody that's been in the industry so long. How do we really get ahead of this? Or can we get ahead of this? And I think we've got to."

But Tapling is far more optimistic. "I'm bullish on humanity's ability to see our way through this," he said.