Banks and credit unions are universally worried about fraud, but are also concerned that security tools don't adequately protect underlying data.

For Flushing Financial's John Buran, the benefits of bank automation are clear, but so are the fears.

The CEO of the $8.8 billion-asset Flushing Financial told American Banker that automation systems are poised to oversee "vast amounts of personal and financial data," which raises questions surrounding "consent, data handling and storage."

"Although automation brings efficiency and innovation benefits, the concerns about data security and privacy risks in banking automation are in my opinion well founded and should remain a critical area for continuous focus and improvement," Buran said.

Buran is not alone. Worries about data security and privacy are holding many institutions back from adopting advanced automation such as artificial intelligence, according to new research from American Banker.

"Fraud teams are already overwhelmed by the number of alerts they are investigating, so many have to focus on the high-dollar losses and manage lower-dollar losses through their dispute processes," said John Meyer, managing director in Cornerstone Advisors' Business Intelligence and Data Analytics practice.

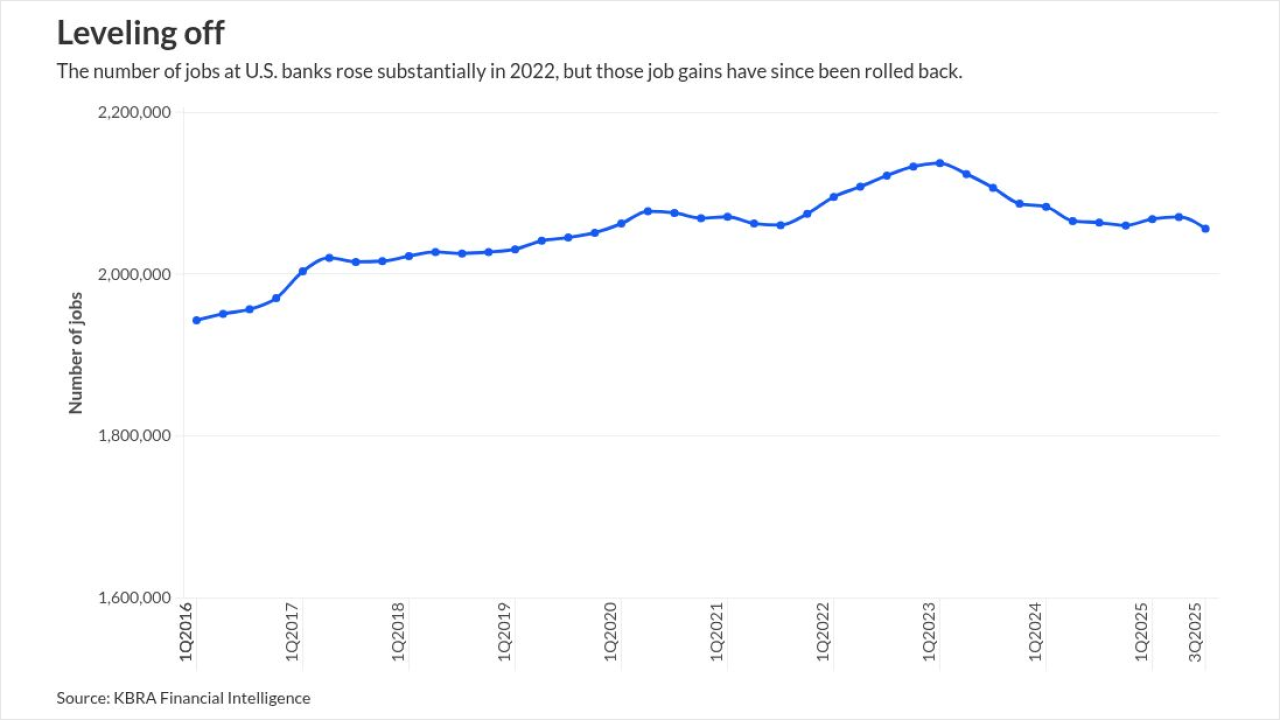

Bank automation by the numbers

American Banker's 2025

PayPal and Visa have both

But 56% of respondents at credit unions and 50% of those at banks with between $10 billion and $100 billion of assets said concerns surrounding data security and privacy were the largest barrier preventing their institutions from going further in integrating automation tools.

Going slow with AI

At the $8.2 billion-asset

"[Currently] automation really has been pushed from a tech perspective, so the tech people are saying, 'this is amazing, you should use this,' and the business people aren't understanding how to utilize it," said Ben Maxim, chief technology officer at MSU Federal Credit Union.

"But this is no different than when the web came out or when mobile came out," Maxim said. "It took years, and the tech was there, it was ready [and] it could do all the things we wanted to do, but until the business folks understood how it could be utilized and what could happen with it, there wasn't either the support for or the understanding of how it could actually be used to benefit the credit union."

Further data from American Banker found that across all respondents, 61% reported that business-oriented executives including chief executives and chief financial officers were the

Larger banks have also kept these considerations in mind but have continued to forge ahead with existing and new automation efforts.

This year, Wells Fargo debuted its plans to

Others like Goldman Sachs, which gave 12,000 developers copilots from GitHub last year and recently began offering all 46,000 employees access to a

Amid all this tech adoption, cybersecurity experts are stressing the importance of proper data security measures.

When it comes to collecting consumer information, there used to be "bright lines between application security and operations security" where executives could clearly distinguish which efforts were for "making products more secure" versus "making the back office more secure," said Joshua McKenty, chief executive and co-founder of Polyguard.

"When we start seeing these interactions of 'we want to collect and analyze data about our users to reduce fraud,' [organizations] are therefore raising [their] own profile as a target," and it isn't always certain that the framework for securing that information is wholly sound, McKenty said.

What to do for better security

So what can financial leaders do to both strengthen data security and promote the adoption of automation?

Parijat Sinha, head of open banking products at FIS, said that focusing more on products that are tailored to "devices and behavioral patterns" as opposed to "individual identifiable attributes" when building automation frameworks can "reduce anxiety around data retention while simultaneously decreasing the risk of agentic or generative AI systems circumventing biometric-based defenses."

"This approach to decisioning that avoids identifiable personal attributes creates a more robust security framework that is inherently more resistant to AI-driven attacks," Sinha said.
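To make the idea of decisioning on "devices and behavioral patterns" rather than identifiable attributes more concrete, here is a minimal, hypothetical sketch; it is not FIS's method or any vendor's product. It assumes a device identifier is pseudonymized with a keyed hash (HMAC-SHA256, a technique swapped in for illustration) so the raw ID need not be retained, and that two invented behavioral signals (keystroke interval and session length) are compared with a customer's own history. The key, field names and weights are all illustrative assumptions.

```python
import hashlib
import hmac
from dataclasses import dataclass
from statistics import mean, pstdev

# Hypothetical key for pseudonymizing device IDs; a real system would keep
# this in a key-management service, never in source code.
DEVICE_KEY = b"example-key-not-for-production"


@dataclass(frozen=True)
class SessionSignals:
    device_token: str       # keyed hash of the device ID, never the raw ID
    keystroke_ms: float     # assumed signal: average gap between keystrokes
    session_seconds: float  # assumed signal: time from login to transaction


def pseudonymize_device(raw_device_id: str) -> str:
    """Replace a raw device identifier with a keyed SHA-256 digest."""
    return hmac.new(DEVICE_KEY, raw_device_id.encode(), hashlib.sha256).hexdigest()


def risk_score(current: SessionSignals, history: list[SessionSignals]) -> float:
    """Return a 0..1 risk score from device novelty and behavioral drift.

    No names, account numbers or other identifiable attributes are used.
    """
    if not history:
        return 1.0  # no baseline yet: treat the session as maximally uncertain

    # Device component: an unseen device contributes up to 0.5 (assumed weight).
    known_devices = {h.device_token for h in history}
    device_component = 0.0 if current.device_token in known_devices else 0.5

    # Behavioral component: each signal contributes up to 0.25 based on how
    # many standard deviations it sits from this customer's own average.
    drift = 0.0
    for field in ("keystroke_ms", "session_seconds"):
        values = [getattr(h, field) for h in history]
        spread = pstdev(values) or 1.0  # avoid dividing by zero on flat history
        z = abs(getattr(current, field) - mean(values)) / spread
        drift += min(z / 3.0, 1.0) * 0.25
    return device_component + drift


phone = pseudonymize_device("device-123")
history = [SessionSignals(phone, 180.0, 40.0), SessionSignals(phone, 200.0, 60.0)]
print(risk_score(SessionSignals(phone, 190.0, 50.0), history))  # familiar device, typical behavior
```

The design choice mirrors the quote: because the score depends only on a pseudonymous device token and behavioral deviations, there is less sensitive personal data to retain, and nothing in the decision hinges on a biometric or identity attribute that a generative model might imitate. The weights and the three-standard-deviation cap are arbitrary placeholders.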

Executives like

Beyond how data is structured, how well the organization understands automation and which products it selects, an institution's culture is a significant factor in how effective adoption is, in both the short and long term.

"As the industry is becoming increasingly automated, all financial professionals should consider at least two questions: How do you want your team to use AI in their day-to-day work (if at all); and are your governance practices consistent with that goal," Azish Filabi, managing director of the American College Cary M. Maguire Center for Ethics in Financial Services, said.