Banks have been using generative AI in their

But for the most part, U.S. banks have been wary of offering a gen AI-driven chatbot to their customers. The risks are grave: Large language model bots can be

In an initiative they're launching Monday, cloud provider Google, consultancy Oliver Wyman and AI testing platform provider Corridor Platforms are offering banks a responsible AI sandbox in which they can test any gen AI use case, with advice available from Oliver Wyman advisors. The sandbox will be free for three months. (Separately, Corridor's platform can run in any cloud environment.)

"The idea is, in a three-month period, they really get a good sense of exactly what needs to be done for their use case to go live and what governance is needed, and eventually, get comfort that it is responsible and a good thing for their customer, and hopefully will also meet regulatory muster," said Manish Gupta, founder and CEO of Corridor Platforms and former technology leader at American Express.

The sandbox addresses some bank regulations, Gupta said. For instance, it provides bias tests, accuracy tests and stability tests.
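Gupta did not describe how these tests are implemented. As a purely illustrative sketch, and not Corridor's method, an accuracy test might check a chatbot's answers against expected phrases, while a stability test might ask the same question several times and measure how often the answer stays the same. Every name below (`accuracy`, `stability`, `stub_bot`) and every sample question and answer is hypothetical; a bias test would need a separate design and is not shown.

```python
from collections import Counter
from typing import Callable


def accuracy(bot: Callable[[str], str], cases: list[tuple[str, str]]) -> float:
    """Share of questions whose answer contains the expected phrase."""
    hits = sum(expected.lower() in bot(question).lower() for question, expected in cases)
    return hits / len(cases)


def stability(bot: Callable[[str], str], question: str, runs: int = 5) -> float:
    """Share of repeated runs that return the single most common answer."""
    answers = [bot(question).strip().lower() for _ in range(runs)]
    return Counter(answers).most_common(1)[0][1] / runs


def stub_bot(question: str) -> str:
    # Hypothetical stand-in for a real model call; the canned answer is made up.
    canned = {"What is the wire cutoff time?": "Wires must be sent by 5 p.m. ET."}
    return canned.get(question, "I'm not sure. Let me connect you with an agent.")


# Made-up test cases: (question, phrase the answer is expected to contain).
cases = [
    ("What is the wire cutoff time?", "5 p.m."),
    ("What is the overdraft fee?", "$35"),
]

accuracy_score = accuracy(stub_bot, cases)
stability_score = stability(stub_bot, "What is the wire cutoff time?")
print(f"accuracy={accuracy_score:.2f} stability={stability_score:.2f}")
```

In practice the stub would be replaced by a call to the model under test, and substring matching would likely give way to a more forgiving comparison, since a real model rarely repeats an answer word for word.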

At the end of the three-month period, companies will be able to move the newly trained gen AI models to their own environments. "We will make it very portable," Gupta said.

Sandbox concept gaining traction

The largest, most sophisticated banks could build their own sandboxes, and some are doing so.

"Tier 1 banks have been using sandboxes with good results – for example, HSBC and JPMorganChase," Alenka Grealish, a lead analyst at Celent, told American Banker.

The advantage for banks of using a third-party-provided sandbox to test AI tools, rather than doing their own testing in-house, is that "it gets them probably three years ahead on the journey, because the Corridor team has built out the capabilities that are needed," said Dov Haselkorn, a consultant who until a month ago was chief operational risk officer at Capital One. "Therefore, you can leverage the R&D and the thought and the development that they have been putting into it for years now, overnight. And speed is really of the essence, especially in this domain, where everybody is fighting to roll out new capabilities immediately. Sure, you could build it, but if it's going to take you three years to build it, you're going to be behind the curve."

Capital One is not using the responsible AI sandbox yet, but the bank has had discussions with the companies behind it, Haselkorn said.

In addition to improving speed, use of a sandbox could also mitigate risk, such as the danger of a chatbot saying something wrong to a customer, Haselkorn said.

"Data risk and third-party data sharing risk are extremely relevant to this type of use case," Haselkorn told American Banker. "Data provenance is a major topic in this field. So if you're building your own models, especially if you're using web-scraped data or data from more recently established datasets that are commonly used to develop open source models, there can be compliance questions with the use of copyrighted data. If you source data from, say, Bloomberg, you're allowed to use it for some things, you're not allowed to use it for other things. You can't turn around and commercialize that data, for example."

In his previous role at Capital One, Haselkorn put a lot of effort into making sure the company was complying with its own agreements, as well as protecting customer data. "If we're using our own customer data to build our own models and then we're deploying models on platforms like Corridor's, we have to make sure that those controls are in place to make sure that none of our customer data accidentally leaks to third parties."

How the new sandbox will work

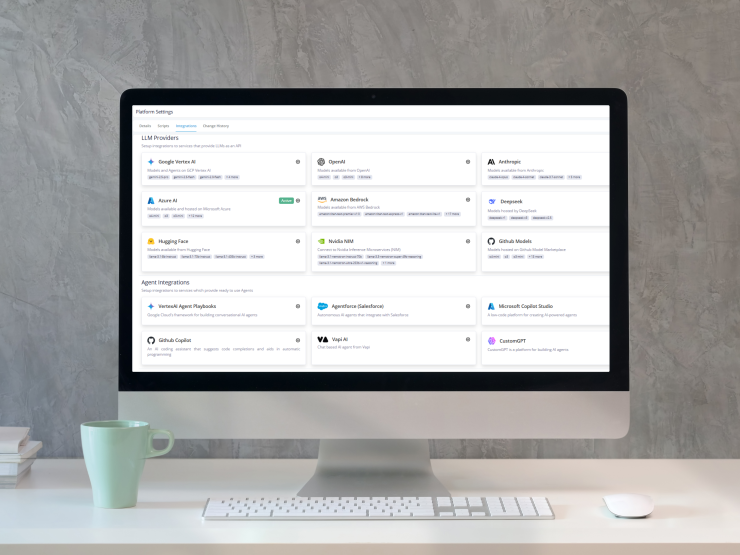

To start with, the responsible AI sandbox will contain a version of Google's Gemini generative AI model, trained in customer service, that bankers can test within Google Cloud. Bankers can also swap in other gen AI models, connect to internal or external data sources via API and see how these models would handle their customer questions.

"In this industrial revolution for knowledge workers, the industry needs to learn how to control" the growing adoption of generative AI in their firms, said Michael Zeltkevic, managing partner and global head of capabilities at Oliver Wyman. This requires solving hard engineering problems, such as how to deal with

Oliver Wyman will offer banks help with using the sandbox and getting gen AI models into production in their own data centers and cloud instances.

A similar sandbox in the UK

The AI sandbox idea has been popular in other countries. For instance, the U.K.'s Financial Conduct Authority, which regulates banks there, said in September it would launch a "Supercharged Sandbox" to help firms experiment safely with AI and support innovation. The sandbox will use NayaOne's digital sandbox infrastructure, which runs on Nvidia hardware. "This collaboration will help those that want to test AI ideas but who lack the capabilities to do so," said Jessica Rusu, the FCA's chief data, intelligence and information officer.

"The U.K.'s Financial Conduct Authority has recognized the value of leveling the playing field by offering an AI sandbox," Grealish said.

The FCA sandbox has received about 300 applications, according to Karan Jain, CEO of NayaOne.

One reason companies use NayaOne's sandbox is to train an AI model while keeping data from being shared with third parties, Jain said. For instance, one insurer uses the platform to train an AI model with its claims photos.

"There's no way an insurance company sends claim data to an AI company, and there's no way an AI company gives its model to an insurance company," Jain said. "They brought the data and the AI model into NayaOne, we locked it down, and we provided the GPUs needed to do that work. We also have all the Nvidia software available through our platform."

U.K. banks use the FCA digital sandbox for such purposes as fraud detection and cybersecurity, Jain said.

The four big enterprise generative AI use cases NayaOne sees in its sandboxes outside the FCA initiative are assisting customer service representatives in contact centers, aiding developers in writing and updating code, improving back-office efficiency (for instance, using gen AI to extract data from PDFs), and running compliance checks.

"You have a secure environment where data doesn't leak," Jain said. "And you can bring one person or multiple teams together to learn, adapt, build — where the industry is stuck right now, in my opinion."

One bank spent 12 months onboarding AI coding assistant Lovable and making it available to a small group of people, Jain said. By the time it was ready for use, newer coding assistants like Cursor had come out.

"That's a really practical challenge that banks are facing: The speed of adoption of technology is 10 times slower than the speed of technology that's entering the market and the employees want it," Jain said.