- Key insight: Ramp connected petabyte-scale unstructured customer data to enterprise LLMs.

- What's at stake: Misclassification risks could trigger litigation, regulatory scrutiny, and reputational damage.

- Forward look: Expect broader LLM integration alongside stricter data-governance and legal discovery expectations.

Source: Bullets generated by AI with editorial review

NEW YORK — Ramp, a New York City fintech, had a goal common to banks and businesses of all kinds: It wanted to democratize the use of AI, and let people throughout the company query company data of all kinds.

"Everyone at Ramp wants to do analysis," Ian Macomber, head of analytics at Ramp, told American Banker. "They all want to leverage the power of AI. I think there's latent demand at a lot of businesses to answer questions easily, cheaply and accurately, and not necessarily have to go through a data team to answer your question."

But, as is the case with many companies with similar goals, Ramp had a data challenge: The information needed to answer questions lived in silos and in diverse formats, including sales call transcripts, customer emails, customer service tickets, purchase orders, invoices, receipts, memos and expense policies.

Data obstacles like this one have tripped up many banks' efforts to provide personalized recommendations or useful virtual assistants, according to Sameer Gupta, financial services AI leader at EY Americas.

"It's the data," he told American Banker in a recent interview. "Most of us have found our banking bot can get stumped very quickly after the second question. It can basically give you your bank balance," and after that has to refer the customer to a human agent.

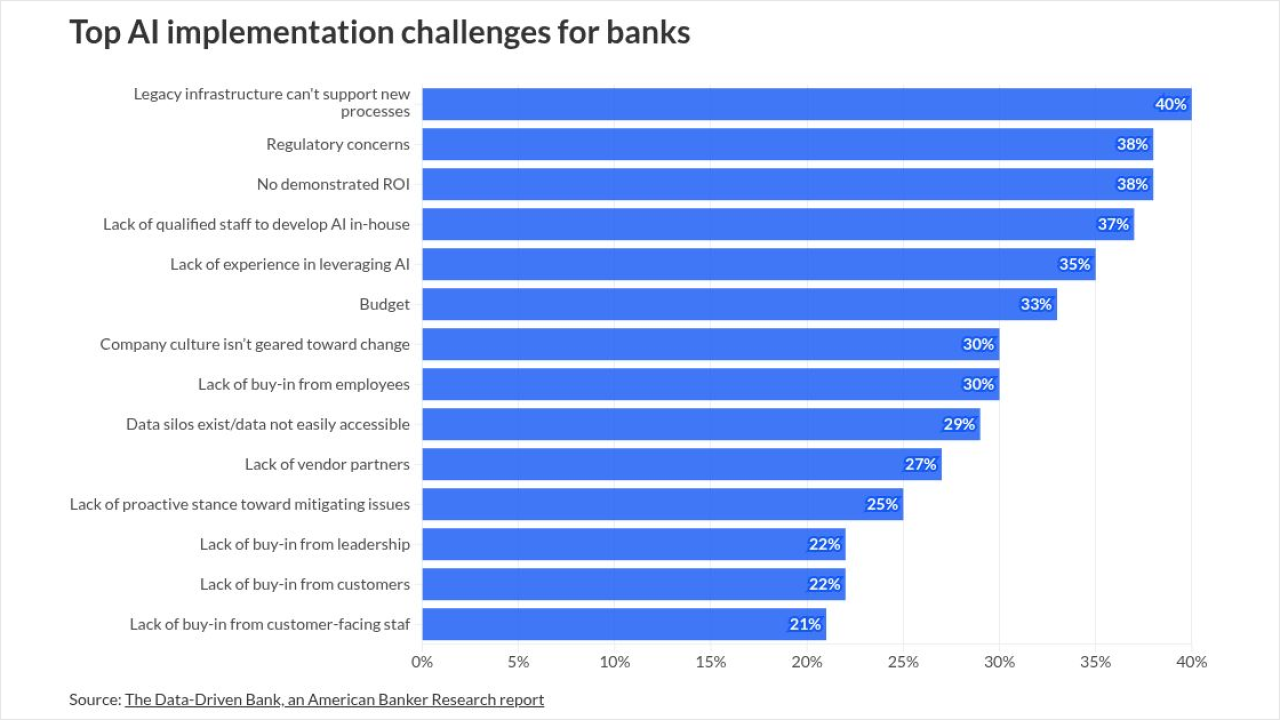

At Ramp — which provides corporate cards, bill payment and other services to businesses — a valuable trove of customer data lives in sales call transcripts. The sales team has hundreds of thousands of customer calls every year, all of them 30 minutes to an hour long, according to Macomber. The transcripts of these calls contain useful narrative, text and instructions.

Other useful data sources are customer emails and customer service tickets, of which Ramp also receives hundreds of thousands a year. Much-needed unstructured data also sits in documents such as purchase orders, invoices, receipts, memos and expense policies.

All told, this trove amounts to a massive cache in the "low petabytes" (that is, the low quadrillions of bytes), Macomber said.

To make all this data queryable by an AI model, Macomber's team fed it into Snowflake's Cortex AI, a cloud-based data platform. The team used Snowflake's application programming interfaces and its Model Context Protocol Server, which connects data sources to large language models and applications from Anthropic, CrewAI, Cursor, Devin by Cognition, Salesforce's Agentforce, UiPath and Windsurf.

Ramp's primary use case for the system today is analyzing customer data to understand customer complaints.

"At Ramp, our mission is to help businesses save time and money," Macomber said. "A critical part of that is deeply understanding their needs from the thousands of pieces of feedback we receive."

Ramp's product teams can ask questions of the Snowflake cloud in natural language and get immediate answers.

Several banks similarly use AI to analyze the customer feedback contained in emails, calls and other channels.

Any regulated company looking to use AI to analyze customer feedback needs to be mindful of a few risks, according to Greg Ewing, an attorney with law firm Dickinson Wright.

One risk is that the technology can make mistakes, Ewing said. Missing a customer complaint is more of a business problem than a legal liability, he said.

"The other concern would be that you analyze something incorrectly, so the AI tells you it's X and it's actually Y," Ewing said. "If this information is presented on a website or used to inform consumers about services, potential legal issues of false advertising or misrepresentation of capabilities could arise."

Another liability could arise from the discovery process of a legal case. An attorney could ask for all feedback the company has received or all response summaries its AI system generated.

"If I'm a litigator trying to prove that some mistake was made or financial fraud occurred, and I say, 'Look, you've known about this issue for a year and you didn't do anything about it because you use this AI tool that is giving you bad results, you're negligent, you are clearly in breach of your obligations or your contract,' I think there [are] potential claims there," Ewing said.

At Ramp, "people are asking a lot more questions than they would when previously they had to go tag someone on the data team," Macomber said. "So these might be questions like, 'How many Ramp businesses are in Ohio? What are the Ramp businesses that are in Ohio? Give me an example of 10 construction businesses.' They're not necessarily deep analysis, but they're really relevant for a salesperson who's on a call with a construction business in Ohio and they need that information right now, and getting that information five minutes from now, the moment's already gone."

The system can also be used to "attack deeper questions," such as, "What are the top sales blockers right now where we really need to prioritize, because we're hearing it over and over on calls and emails?" Macomber said.

Before the new system was put in place, questions like this would have taken machine learning engineers a couple of weeks to figure out how to answer. "It's really democratizing access to the entire company," he said.

To protect customer data privacy, Ramp does not put any personally identifiable data into the Snowflake cloud.

"If you are doing analysis, there is not a reason for you to have full Social Security numbers," Macomber said. "There is not even a reason for you to have the last four, so we don't put that data into Snowflake."
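The article does not describe how Ramp keeps identifiers out of its analytics data. As a rough illustration only, one common approach is to scrub text before it is loaded anywhere. The sketch below is in Python; the pattern, the function names `redact_ssns` and `prepare_for_load`, and the placeholder string are all hypothetical, and it catches only strings shaped like a full nine-digit Social Security number.

```python
import re

# Hypothetical pattern (an assumption, not Ramp's method): matches SSN-shaped
# strings such as 123-45-6789, 123 45 6789 or 123456789.
SSN_PATTERN = re.compile(r"\b\d{3}[- ]?\d{2}[- ]?\d{4}\b")


def redact_ssns(text: str, placeholder: str = "[REDACTED-SSN]") -> str:
    """Replace anything that looks like a full SSN with a placeholder."""
    return SSN_PATTERN.sub(placeholder, text)


def prepare_for_load(records: list[str]) -> list[str]:
    """Redact every record before it is handed to a loader (not shown)."""
    return [redact_ssns(record) for record in records]


if __name__ == "__main__":
    sample = [
        "Customer said her SSN is 123-45-6789 during the call.",
        "Invoice 1042 was paid on time.",
    ]
    for line in prepare_for_load(sample):
        print(line)
```

A pattern like this would not catch a bare last-four fragment, which Macomber also says Ramp excludes; that kind of value usually has to be dropped at the field level rather than found by pattern matching.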

The new system has resulted in more employee use of and demand for AI, he said.

"Say there's a two-lane highway with traffic on it. So you build another four lanes and there's still traffic," Macomber said. "The reason why is, if you make it easier to drive, more people will drive. As we have made it easier to ask questions, as we've made it easier for people to analyze unstructured data and see themes across expense policies and calls and customer service tickets, more people have done that."